How to define a summary evaluator

Some metrics can only be defined at the level of the entire experiment, not for the individual runs within it.

For example, you may want to compute the overall pass rate or F1 score of your evaluation target across all examples in the dataset.

These are called summary evaluators. Instead of taking a single Run and Example, they take a list of each (or, as in the examples on this page, lists of outputs and reference outputs).

Below, we'll implement a very simple summary evaluator that checks whether the overall pass rate exceeds 50%:

Python:

    def pass_50(outputs: list[dict], reference_outputs: list[dict]) -> bool:
        """Pass if >50% of all results are correct."""
        # Count the rows whose predicted class matches the reference label.
        correct = sum(
            out["class"] == ref["label"]
            for out, ref in zip(outputs, reference_outputs)
        )
        return correct / len(outputs) > 0.5

TypeScript:

    function summaryEval({ outputs, referenceOutputs }: { outputs: Record<string, any>[], referenceOutputs?: Record<string, any>[] }) {
      let correct = 0;
      for (let i = 0; i < outputs.length; i++) {
        // Compare the predicted class with the reference label for each row.
        if (outputs[i]["class"] === referenceOutputs?.[i]?.["label"]) {
          correct += 1;
        }
      }
      // Pass if more than 50% of all results are correct.
      return { key: "pass", score: correct / outputs.length > 0.5 };
    }
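Because a summary evaluator is an ordinary function over lists, it can be sanity-checked locally before running an experiment. The following is a minimal sketch that repeats the Python `pass_50` evaluator above and calls it on hand-written data. The sample rows and the "Toxic" label are assumptions made for illustration; they are not taken from the dataset.

```python
def pass_50(outputs: list[dict], reference_outputs: list[dict]) -> bool:
    """Pass if >50% of all results are correct."""
    correct = sum(
        out["class"] == ref["label"]
        for out, ref in zip(outputs, reference_outputs)
    )
    return correct / len(outputs) > 0.5


# Hypothetical sample data: the classifier always predicts "Not toxic".
outputs = [{"class": "Not toxic"}] * 3

# Only 1 of 3 reference labels matches, so the pass rate is below 50%.
mostly_wrong = pass_50(
    outputs,
    [{"label": "Not toxic"}, {"label": "Toxic"}, {"label": "Toxic"}],
)

# 2 of 3 reference labels match, so the pass rate is above 50%.
mostly_right = pass_50(
    outputs,
    [{"label": "Not toxic"}, {"label": "Not toxic"}, {"label": "Toxic"}],
)

print(mostly_wrong, mostly_right)
```

Note that `pass_50` divides by `len(outputs)`, so it raises `ZeroDivisionError` on an empty list.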

You can then pass this evaluator to the evaluate method as follows:

Python:

    from langsmith import Client

    ls_client = Client()

    # Clone the public dataset into your workspace.
    dataset = ls_client.clone_public_dataset(
        "https://smith.langchain.com/public/3d6831e6-1680-4c88-94df-618c8e01fc55/d"
    )

    def bad_classifier(inputs: dict) -> dict:
        """Baseline target that labels every input as not toxic."""
        return {"class": "Not toxic"}

    def correct(outputs: dict, reference_outputs: dict) -> bool:
        """Row-level correctness evaluator."""
        return outputs["class"] == reference_outputs["label"]

    results = ls_client.evaluate(
        bad_classifier,
        data=dataset,
        evaluators=[correct],
        summary_evaluators=[pass_50],
    )

TypeScript:

    import { Client } from "langsmith";
    import { evaluate } from "langsmith/evaluation";
    import type { EvaluationResult } from "langsmith/evaluation";

    const client = new Client();
    const datasetName = "Toxic queries";

    // Clone the public dataset into your workspace under datasetName.
    const dataset = await client.clonePublicDataset(
      "https://smith.langchain.com/public/3d6831e6-1680-4c88-94df-618c8e01fc55/d",
      { datasetName: datasetName }
    );

    // Row-level correctness evaluator.
    function correct({ outputs, referenceOutputs }: { outputs: Record<string, any>, referenceOutputs?: Record<string, any> }): EvaluationResult {
      const score = outputs["class"] === referenceOutputs?.["label"];
      return { key: "correct", score };
    }

    // Baseline target that labels every input as not toxic.
    function badClassifier(inputs: Record<string, any>): { class: string } {
      return { class: "Not toxic" };
    }

    await evaluate(badClassifier, {
      data: datasetName,
      evaluators: [correct],
      summaryEvaluators: [summaryEval],
      experimentPrefix: "Toxic Queries",
    });

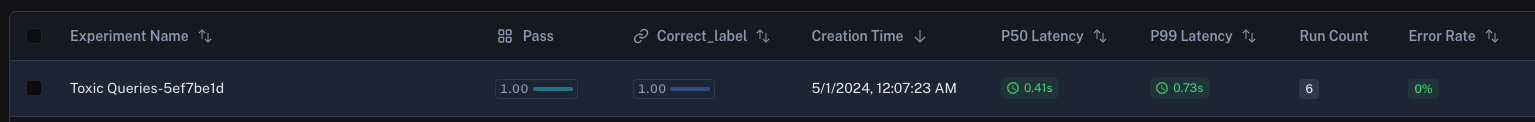

In the LangSmith UI, you'll see the summary evaluator's score displayed with the corresponding key.
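The introduction also mentions F1 score as an experiment-level metric. A summary evaluator for it might look like the sketch below. It assumes the same "class" and "label" fields used above, treats "Toxic" as the positive label (an assumption, since only "Not toxic" appears on this page), and returns a dict with a key and a score, mirroring the shape of the TypeScript example. The function name `f1_summary` is hypothetical.

```python
def f1_summary(outputs: list[dict], reference_outputs: list[dict]) -> dict:
    """Compute F1 for the positive class across the whole experiment."""
    positive = "Toxic"  # assumed positive label, not confirmed by the dataset
    tp = fp = fn = 0
    for out, ref in zip(outputs, reference_outputs):
        predicted = out["class"] == positive
        actual = ref["label"] == positive
        if predicted and actual:
            tp += 1  # true positive
        elif predicted:
            fp += 1  # false positive
        elif actual:
            fn += 1  # false negative
    # F1 = 2*TP / (2*TP + FP + FN); report 0.0 when there are no positives at all.
    denominator = 2 * tp + fp + fn
    score = 2 * tp / denominator if denominator else 0.0
    return {"key": "f1", "score": score}


# Hypothetical rows: one true positive, one false positive, one false negative.
result = f1_summary(
    [{"class": "Toxic"}, {"class": "Toxic"}, {"class": "Not toxic"}, {"class": "Not toxic"}],
    [{"label": "Toxic"}, {"label": "Not toxic"}, {"label": "Toxic"}, {"label": "Not toxic"}],
)
print(result)
```

A classifier that always predicts "Not toxic", like the one above, would never produce a true positive, so its F1 under this sketch would be 0.0. That is exactly the kind of failure an accuracy-style pass rate can hide on an imbalanced dataset.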